I am a researcher at OpenAI, broadly interested in AI and robotics. I completed my PhD at UC Berkeley, advised by Professor Ken Goldberg, as a member of the AUTOLAB, which is part of the Berkeley Artificial Intelligence Research (BAIR) Lab. I was supported by the NSF Graduate Research Fellowship, and I have also been fortunate to intern at NVIDIA. Before Berkeley, I completed my undergraduate studies at UCLA, double-majoring in Applied Mathematics and Statistics, where I was advised by Professor Jungseock Joo.

Email / CV / Google Scholar / LinkedIn / Twitter

My research interests include robot learning, manipulation, and perception. My PhD research focused on improving the robustness and generalization of robot policies through data and model scaling, data augmentation, and leveraging simulation data. Below are some of my research papers.

Guanhua Ji*, Harsha Polavaram*, Lawrence Yunliang Chen*, Sandeep Bajamahal, Zehan Ma, Simeon Adebola, Chenfeng Xu, Ken Goldberg

Preprint

PDF / Website / Code / Dataset

A systematic study of scaling robot augmentation, together with a large open-source dataset that augments Open X-Embodiment (OXE) with 9× more robot embodiments across 16 datasets, comprising over 4.4M trajectories.

Abhiram Maddukuri*, Zhenyu Jiang*, Lawrence Yunliang Chen*, Soroush Nasiriany*, Yuqi Xie, Yu Fang, Wenqi Huang, Zu Wang, Zhenjia Xu, Nikita Chernyadev, Scott Reed, Ken Goldberg, Ajay Mandlekar†, Linxi "Jim" Fan†, Yuke Zhu†

Robotics: Science and Systems (RSS), 2025

PDF / Website

A simple recipe for co-training robot manipulation policies on simulation and real-world data.

|

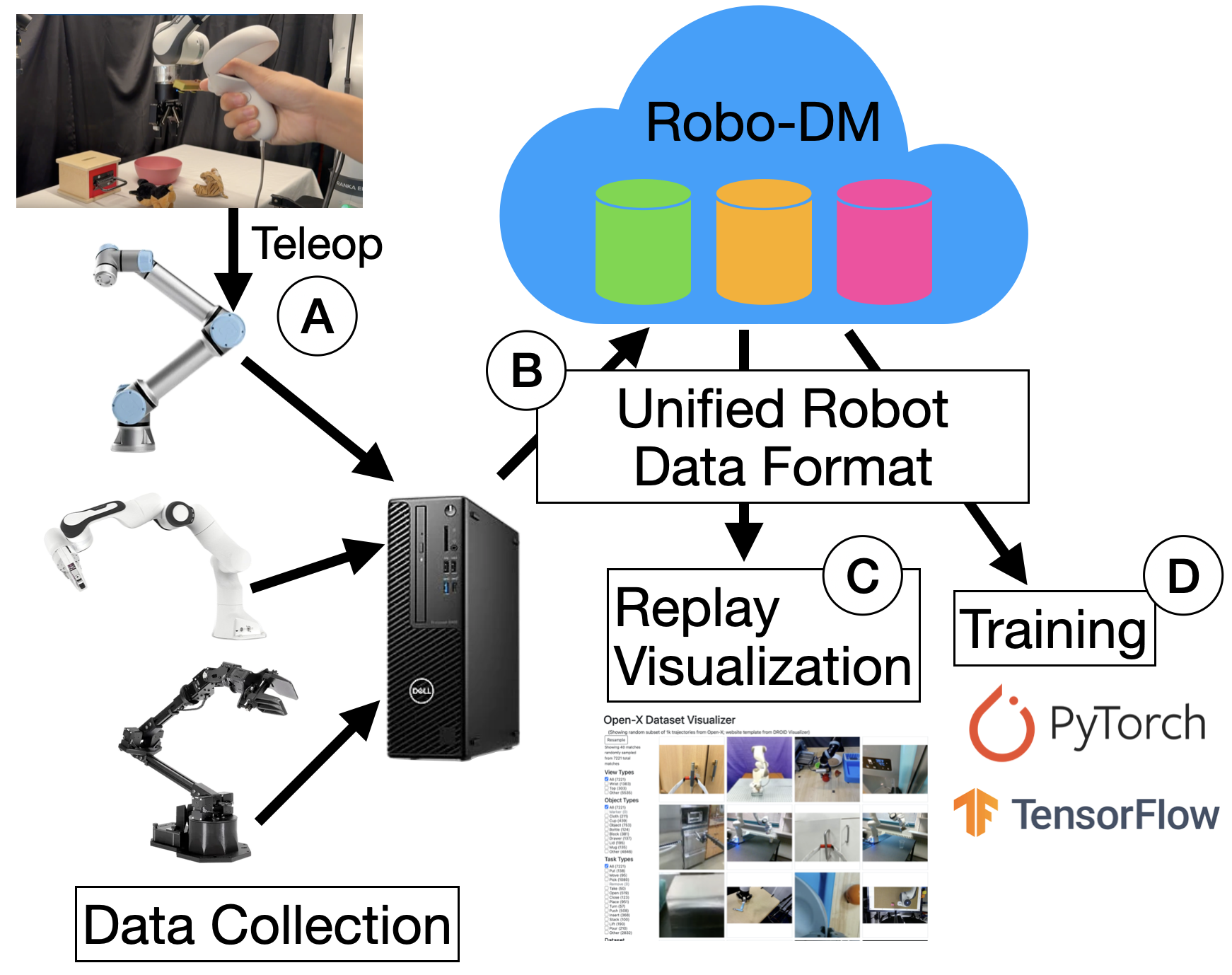

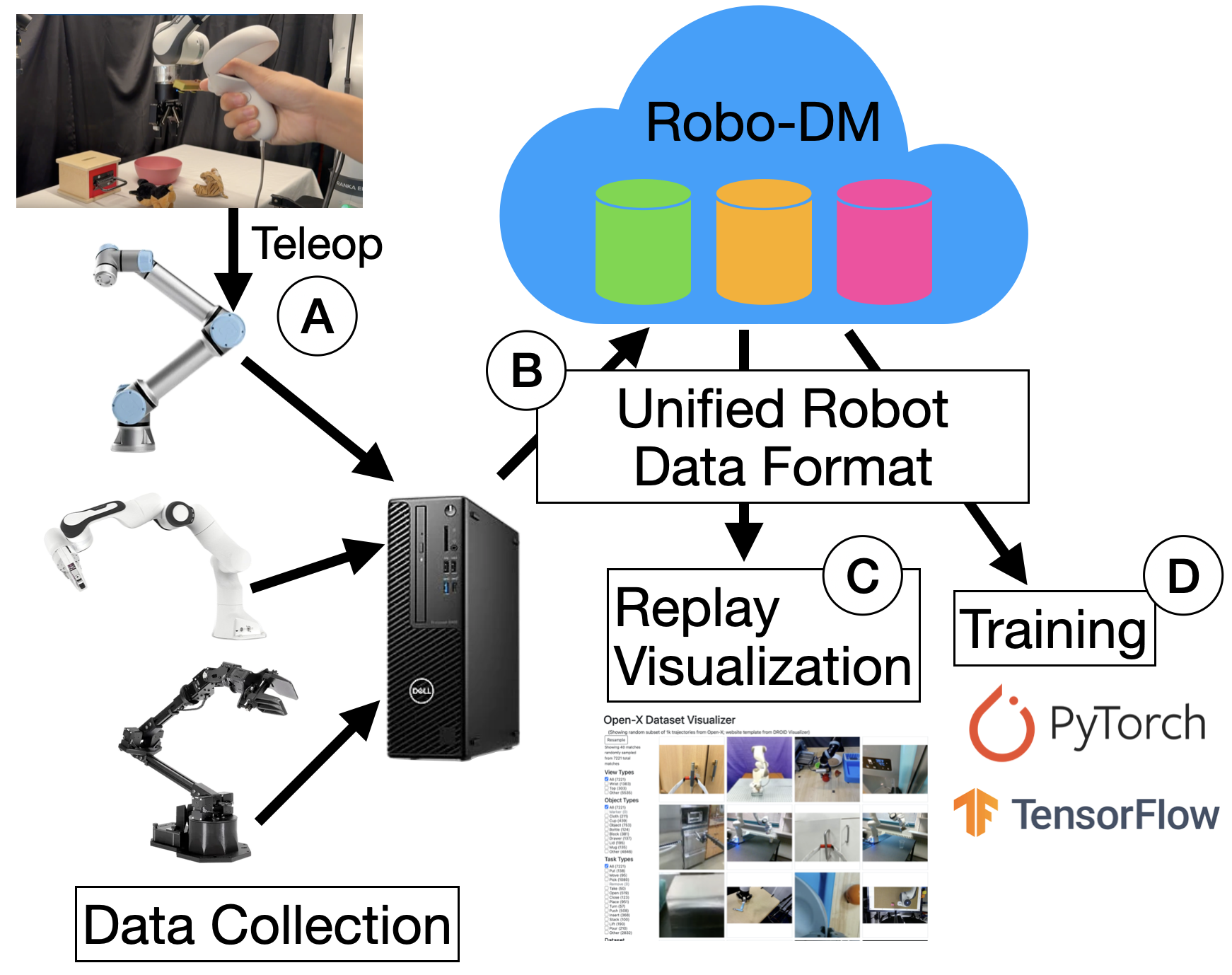

Kaiyuan Chen, Letian Fu, David Huang, Yanxiang Zhang, Lawrence Yunliang Chen, Huang Huang, Kush Hari, Ashwin Balakrishna, Ted Xiao, Pannag R Sanketi, John Kubiatowicz, Ken Goldberg IEEE International Conference on Robotics and Automation (ICRA), 2025 (Best Paper Award on Robot Learning) PDF / Code A cloud-based toolkit that optimizes robot data storage and loading using EBML/MKV, achieving up to 70x compression and 50x faster loading with minimal impact on training performance. |

Letian Fu*, Huang Huang*, Gaurav Datta*, Lawrence Yunliang Chen*, William Chung-Ho Panitch, Fangchen Liu, Hui Li, Ken Goldberg

IEEE International Conference on Robotics and Automation (ICRA), 2025

PDF / Website / Code / Dataset

A robot policy that learns new tasks when prompted with robot trajectories, without any fine-tuning.

|

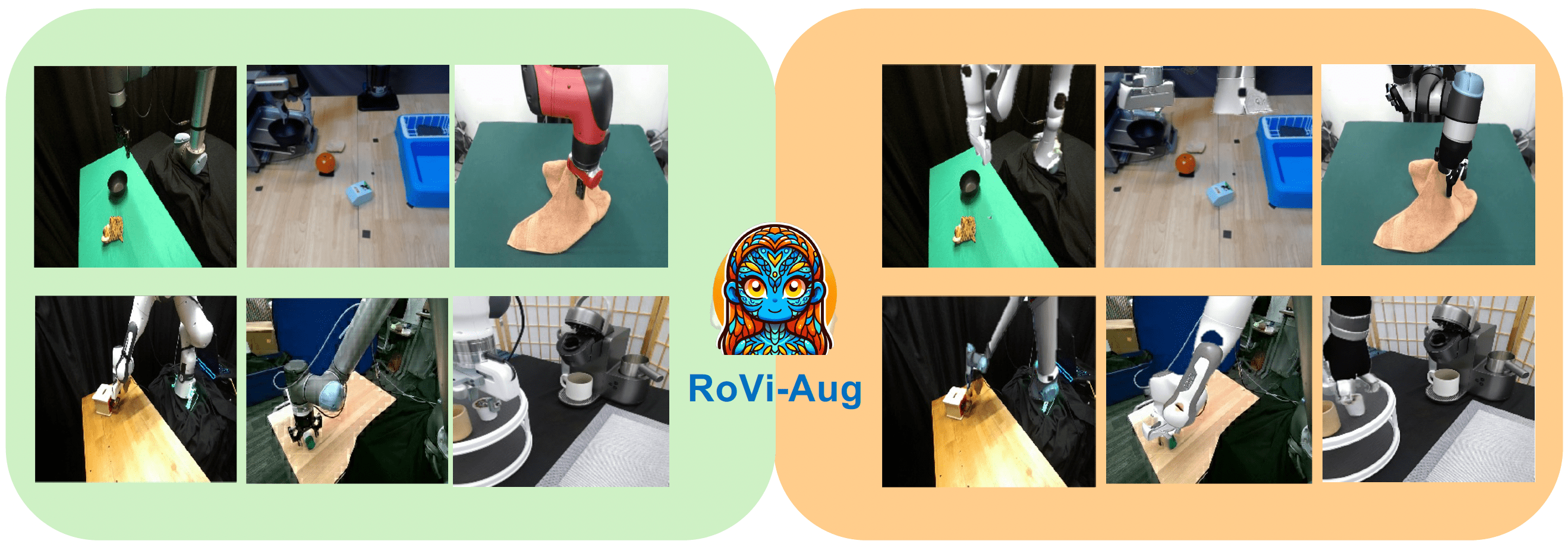

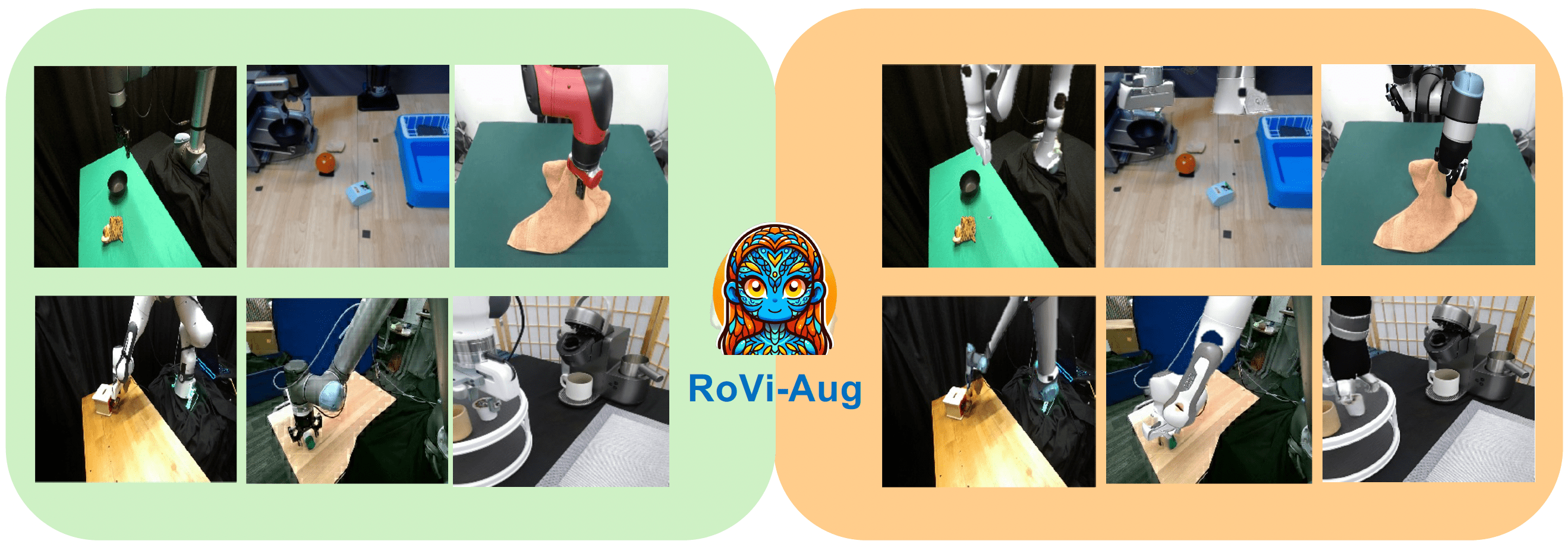

Lawrence Yunliang Chen*, Chenfeng Xu*, Karthik Dharmarajan*, Zubair Irshad, Richard Cheng, Kurt Keutzer, Masayoshi Tomizuka, Quan Vuong, Ken Goldberg Conference on Robot Learning (CoRL), 2024 (Oral Presentation, 4.3%) PDF / Website / Press / Code A data augmentation pipeline that uses diffusion models to generate novel robots and camera viewpoints. |

Lawrence Yunliang Chen*, Kush Hari*, Karthik Dharmarajan*, Chenfeng Xu, Quan Vuong, Ken Goldberg

Robotics: Science and Systems (RSS), 2024

PDF / Website / Code

Transfers a visuomotor policy trained on one robot zero-shot to unseen robot embodiments by cross-painting the images.

|

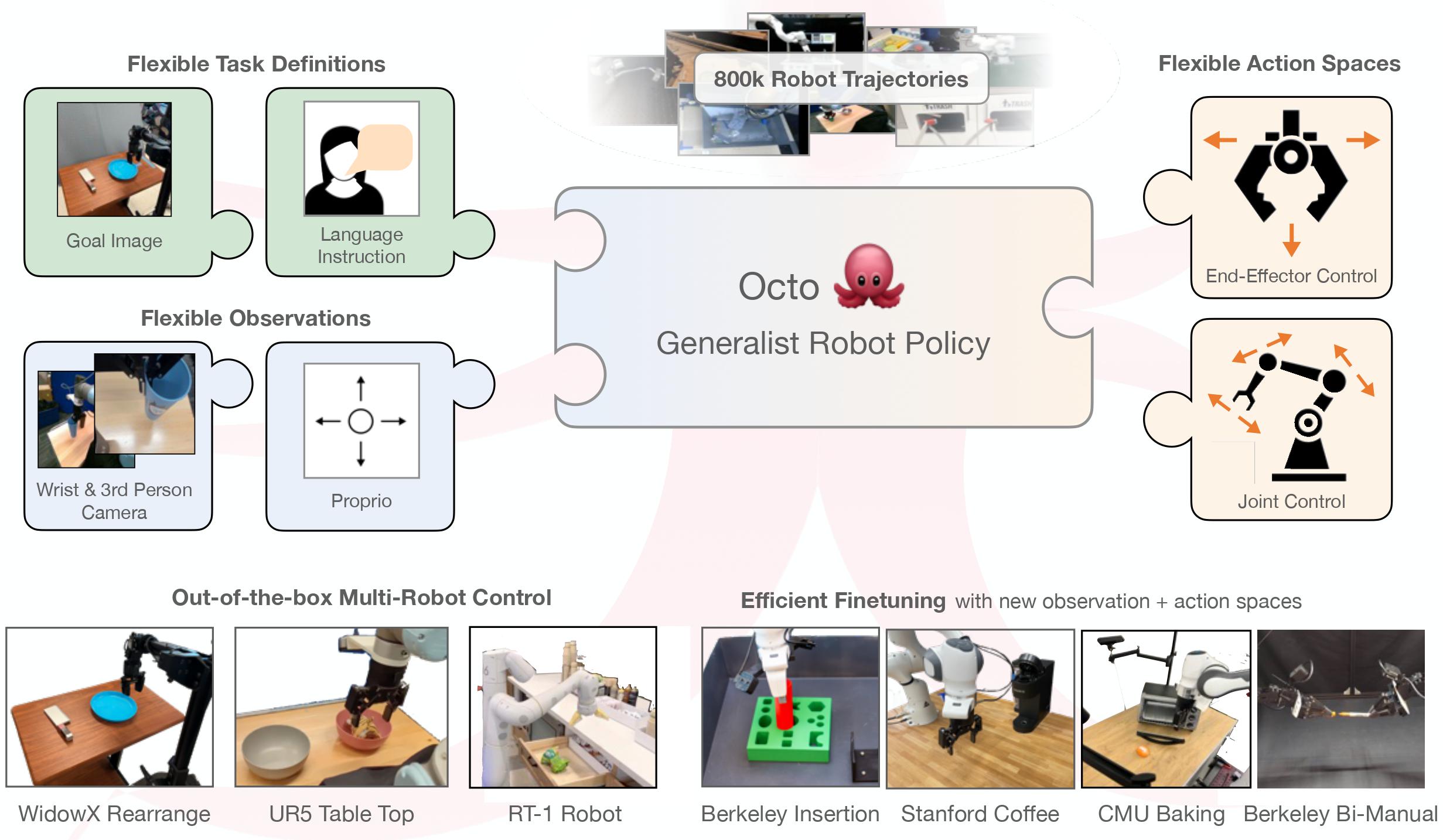

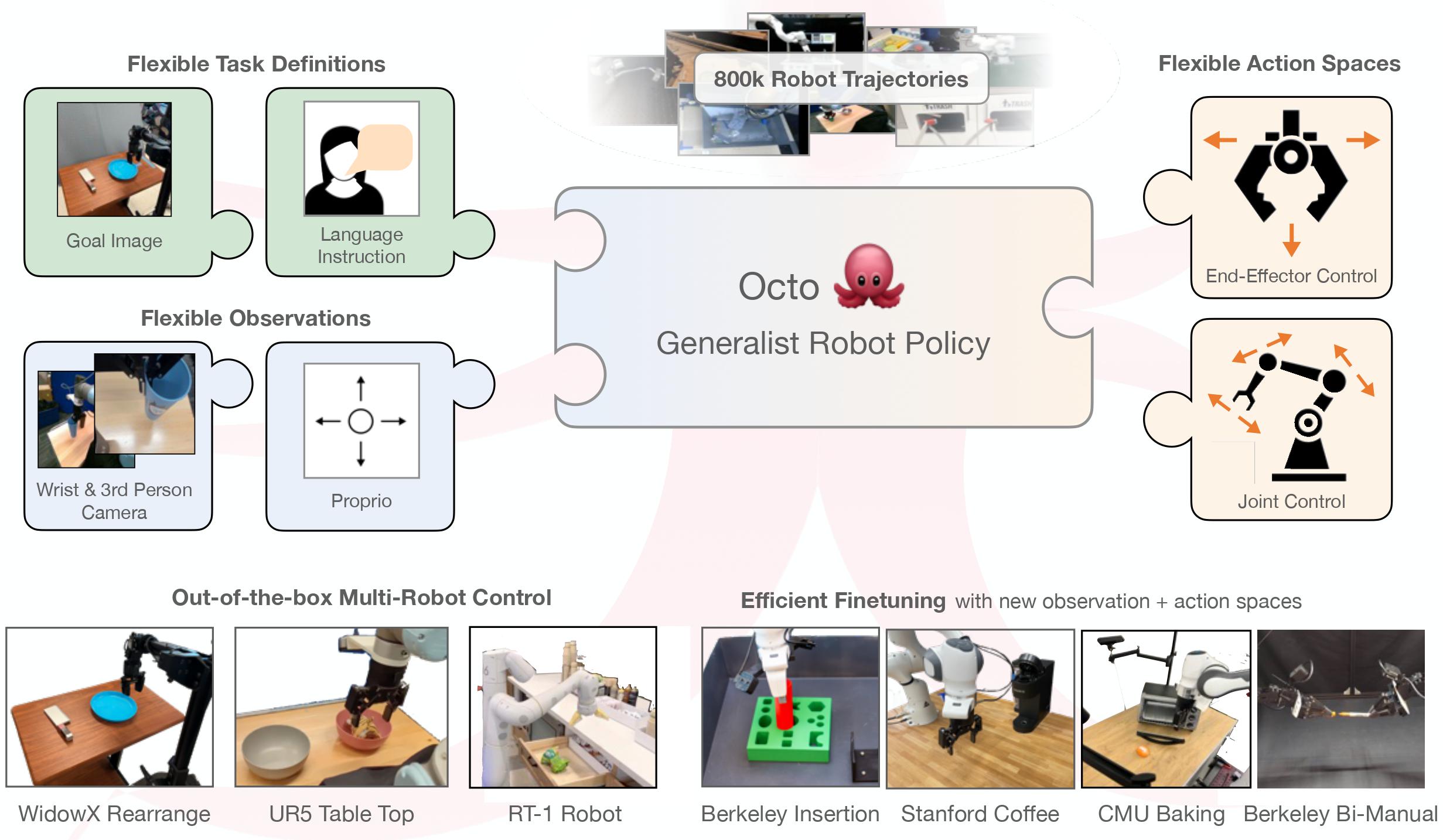

Dibya Ghosh*, Homer Walke*, Karl Pertsch*, Kevin Black*, Oier Mees*, Sudeep Dasari, Joey Hejna, Tobias Kreiman, Charles Xu, Jianlan Luo, You Liang Tan, Lawrence Yunliang Chen, Pannag Sanketi, Quan Vuong, Ted Xiao, Dorsa Sadigh, Chelsea Finn, Sergey Levine Robotics: Science and Systems (RSS), 2024 PDF / Website / Code An open-source generalist robot policy trained on a mixture of 25 datasets from the Open X-Embodiment dataset. |

Alexander Khazatsky*, Karl Pertsch*, Suraj Nair, Ashwin Balakrishna, Sudeep Dasari, Siddharth Karamcheti, Soroush Nasiriany, Mohan Kumar Srirama, Lawrence Yunliang Chen, ..., Osbert Bastani, Glen Berseth, Jeannette Bohg, Ken Goldberg, Abhinav Gupta, Abhishek Gupta, Dinesh Jayaraman, Joseph J Lim, Jitendra Malik, Roberto Martín-Martín, Subramanian Ramamoorthy, Dorsa Sadigh, Shuran Song, Jiajun Wu, Michael C. Yip, Yuke Zhu, Thomas Kollar, Sergey Levine, Chelsea Finn

Robotics: Science and Systems (RSS), 2024

PDF / Website / Hardware Code / Policy Learning Code

A dataset of 76k demonstration trajectories (350 hours of interaction data), collected across 564 scenes and 84 tasks by 50 data collectors over 12 months.

Open X-Embodiment Collaboration

IEEE International Conference on Robotics and Automation (ICRA), 2024 (Best Paper Award)

PDF / Website / Blog Post / Code / Data

We introduce the Open X-Embodiment Dataset, the largest open-source real robot dataset to date, and train two models on this robotics data mixture: RT-1-X and RT-2-X.

Adam Rashid*, Satvik Sharma*, Chung Min Kim, Justin Kerr, Lawrence Yunliang Chen, Angjoo Kanazawa, Ken Goldberg

Conference on Robot Learning (CoRL), 2023 (Oral Presentation, 6.6%) (Best Paper Finalist)

PDF / Website / Code / Data

Given a natural language query, uses LERF, built on CLIP and DINO features, to perform zero-shot semantic grasping of object parts.

Satvik Sharma*, Huang Huang*, Kaushik Shivakumar, Lawrence Yunliang Chen, Ryan Hoque, Brian Ichter, Ken Goldberg

Conference on Robot Learning (CoRL), 2023

PDF / Website

Uses VLMs and LLMs to create semantic distributions that can be integrated into downstream mechanical search policies.

Lawrence Yunliang Chen, Baiyu Shi, Roy Lin, Daniel Seita, Ayah Ahmad, Richard Cheng, Thomas Kollar, David Held, Ken Goldberg

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023 (Best Industrial Robotics Research for Applications Finalist)

PDF / Website

An algorithm for grasping a single layer of plastic bags and fabrics using purely visual feedback, plus an improved bagging algorithm.

Lawrence Yunliang Chen, Baiyu Shi, Daniel Seita, Richard Cheng, Thomas Kollar, Ken Goldberg

IEEE International Conference on Robotics and Automation (ICRA), 2023

PDF / Website

A semantic representation of plastic bags, and an algorithm that enables a bimanual robot to open a plastic bag from unstructured configurations, insert one or more objects into it, and lift it.

Ryan Hoque, Lawrence Yunliang Chen, Satvik Sharma, Karthik Dharmarajan, Brijen Thananjeyan, Pieter Abbeel, Ken Goldberg

Conference on Robot Learning (CoRL), 2022 (Oral Presentation, 6.5%)

PDF / Website / Code

A formalism, several new algorithms, and a benchmark for interactive fleet learning: interactive learning with multiple robots and multiple humans.

Lawrence Yunliang Chen*, Huang Huang*, Ellen Novoseller, Daniel Seita, Jeffrey Ichnowski, Michael Laskey, Richard Cheng, Thomas Kollar, Ken Goldberg

The International Symposium on Robotics Research (ISRR), 2022

PDF / Website

Given a new garment, quickly learns a fling action that effectively smooths it using only one robot arm.

Lawrence Yunliang Chen, Huang Huang, Michael Danielczuk, Jeffrey Ichnowski, Ken Goldberg

IEEE International Conference on Automation Science and Engineering (CASE), 2022 (Best Student Paper Finalist)

PDF / Website

Optimizes the arrangement of objects on a shelf to make them easier for a robot to search for and retrieve.

Vincent Lim*, Huang Huang*, Lawrence Yunliang Chen, Jonathan Wang, Jeffrey Ichnowski, Daniel Seita, Michael Laskey, Ken Goldberg

IEEE International Conference on Robotics and Automation (ICRA), 2022

PDF / Website / Video / Press

Uses Real2Sim2Real to learn to manipulate a free-end cable so that its endpoint accurately reaches desired target positions on a planar surface.

|

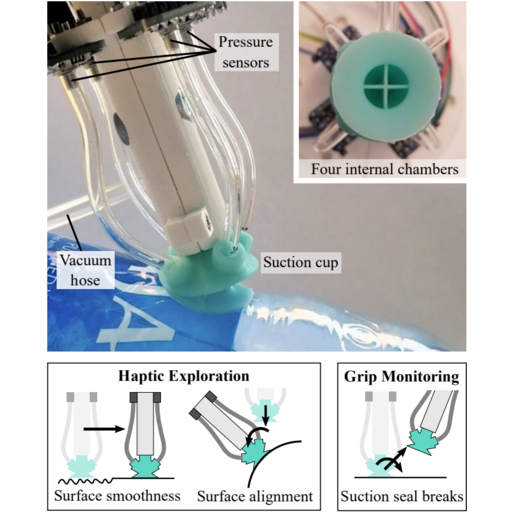

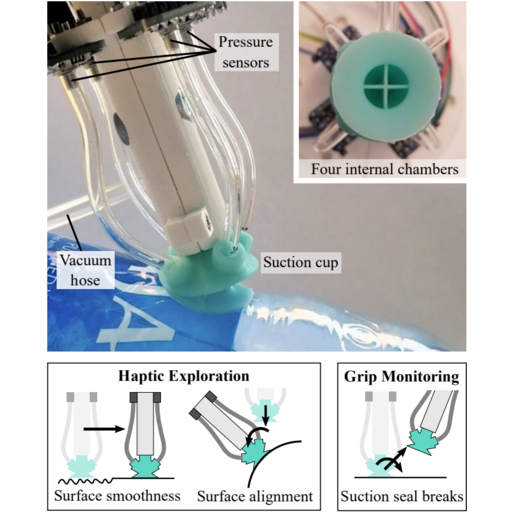

Tae Myung Huh, Kate Sanders, Michael Danielczuk, Monica Li, Yunliang Chen, Ken Goldberg, Hannah S. Stuart IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021 PDF / Video A new suction cup design that contains multiple chambers for gripping and haptic exploration. |

|

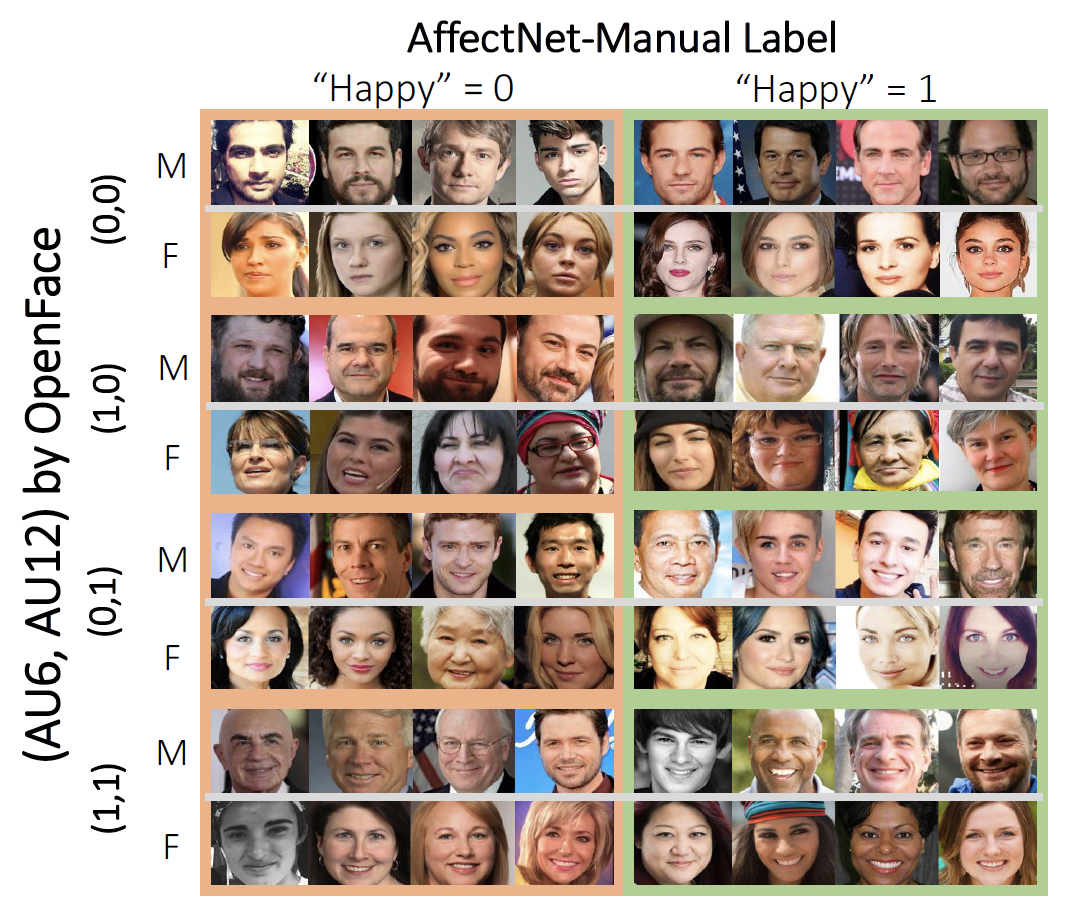

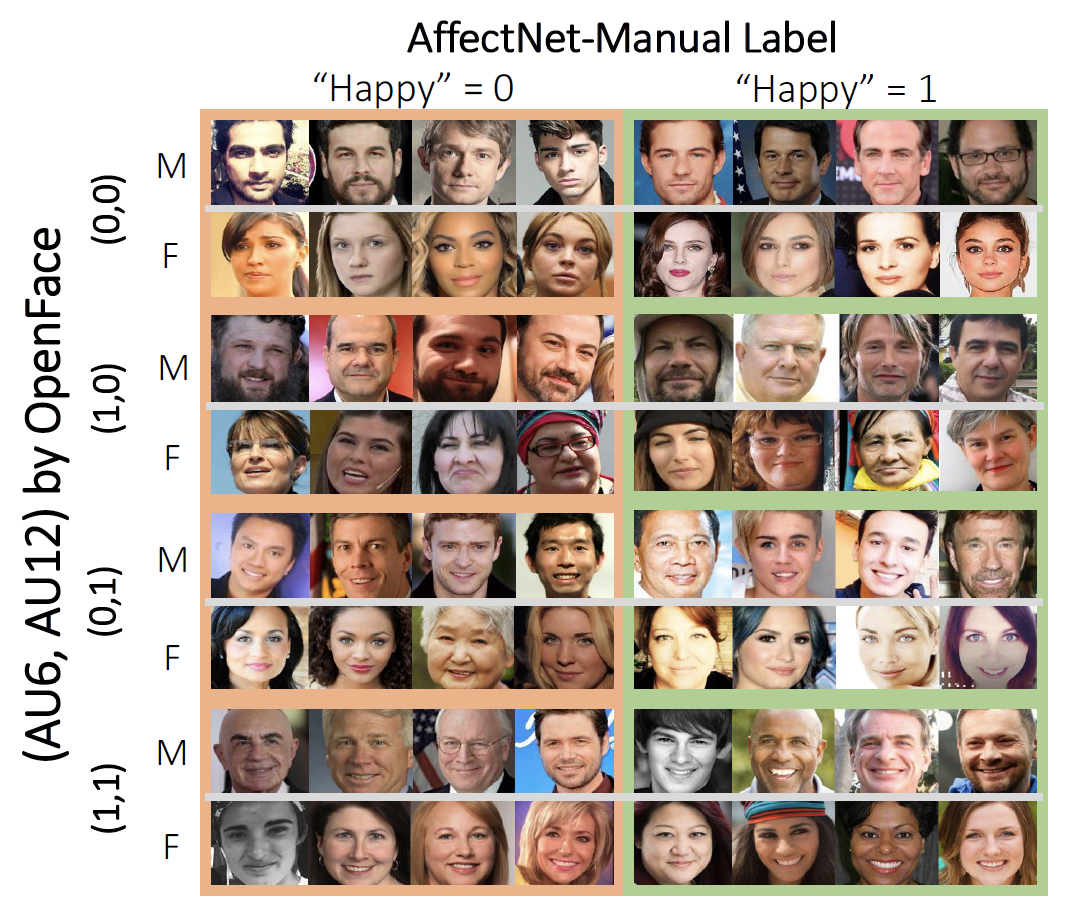

Yunliang Chen, Jungseock Joo IEEE/CVF International Conference on Computer Vision (ICCV), 2021 PDF (with appendix) / Code Analysis of annotation bias for many large public facial expression recognition datasets. |

Lawrence Yunliang Chen, Massimiliano de Sa, Satvik Sharma, Vainavi Viswanath

Project advisor and sponsor: Jun Zeng

Website / Code

Berkeley EECS C106A/206A (Introduction to Robotics) Final Project

Grassi Fellowship, 2023-24

Endowed PhD fellowship of the Berkeley IEOR Department, awarded to one student per year

Katta G. Murty Prize for Best Paper in Optimization, 2023

Awarded by the IEOR Department, UC Berkeley, for the paper "Optimal Shelf Arrangement and Rearrangement to Minimize Robot Retrieval Time"

NSF Graduate Research Fellowship, 2022-25

NSF Graduate Research Fellowship Program (GRFP)

Scholarship for the International Elite Summer School in Robotics and Entrepreneurship, 2022

Funded by the Danish Ministry of Higher Education and Science, Odense Municipality, the Innovation Centre Denmark, the Novo Nordisk Foundation, and private partners

Chiang Fellowship for Graduate Scholars in Manufacturing and Engineering, 2020-21

UC Berkeley IEOR Departmental Fellowship

Reviewer: IJRR, IJCV, T-RO, RA-L, TPAMI, TIP, T-ASE, THRI, ICRA, IROS, CoRL

Workshop Organizer:

5th Workshop: Reflections on Representations and Manipulating Deformable Objects @ ICRA 2025

1st Workshop on X-Embodiment Robot Learning @ CoRL 2024

4th Workshop on Representing and Manipulating Deformable Objects @ ICRA 2024

Graduate Student Instructor, IEOR 215: Analysis and Design of Databases, Spring 2022

Website template from Jon Barron